Neuromorphic Engineer: Bridging Devices, Circuits, Algorithms and Applications

I design hardware and translate ideas across the neuromorphic ecosystem, connecting physical devices and mixed-signal circuits with spiking neural networks, event-based algorithms, applications, and commercial strategy.

- Mixed-signal VLSI for neuromorphic computing

- Event-based vision: from prototype to adoption

- Flexible/organic electronics for neuromorphic circuits

- Strategy, partnerships and real-world deployment

About

I’m a neuromorphic engineer with hands-on experience from transistor-level design through to real-world deployment.

My career has spanned chip design, computer vision, AI, and flexible electronics, always centred on how we can build intelligent systems that work efficiently in the physical world.

I designed and fabricated mixed-signal spiking network chips, then developed neural-prosthetic hardware in post-doctoral research. Later, I led a start-up bringing event-based vision sensors to market, where I combined circuit design with customer liaison. I helped many companies explore what neuromorphic sensing could do for them, and secured co-development and funding partnerships.

I then worked on cloud-based AI, building pipelines for natural language processing using the proto-LLMs of 2018 and graph-based data models.

Since then I’ve collaborated with start-ups applying neuromorphic technology, helping them define architectures and build partnerships. These projects have kept me close to the evolving industrial landscape of event-based vision and spiking computation.

In parallel, I’ve been pursuing blue-sky research into flexible electronics and tactile sensing. That work continues to connect neuromorphic principles with emerging device platforms, from printed and organic transistors to new kinds of robotic skin.

Across all of this, I’ve moved comfortably between devices, circuits, algorithms and applications. That perspective helps me connect specialists who rarely meet: materials scientists and IC designers, embedded engineers and machine-learning researchers, academic labs and industrial R&D teams.

I can help you translate ideas across these boundaries, whether that means designing or reviewing hardware, shaping neuromorphic architectures, or guiding applications from lab to field.

Projects

Selected publications

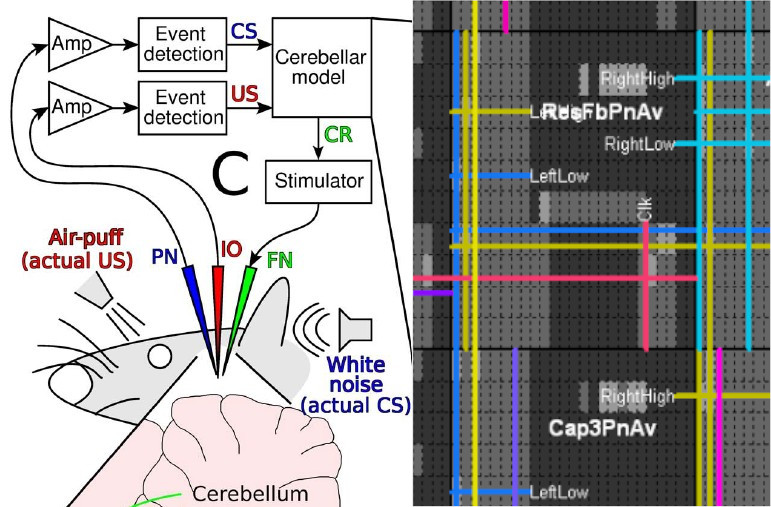

- Hogri R, Bamford SA, Taub AH, Magal A, Del Giudice P, Mintz M. “A neuro-inspired model-based closed-loop neuroprosthesis…” Scientific Reports (2015). pdf

- Bamford SA, Hogri R, Giovannucci A, et al. “A VLSI field-programmable mixed-signal array …” IEEE TNSRE (2012). pdf

- Bamford SA, Murray AF, Willshaw DJ. “STDP with weight dependence evoked from physical constraints.” IEEE TBioCAS (2012). pdf

- Bamford SA, Murray AF, Willshaw DJ. “Large Developing Receptive Fields …” IEEE TNN (2010). pdf

- Moeys D, Corradi F, Li C, Bamford S, et al. “A Sensitive DAVIS sensor …” IEEE TBioCAS (2018). pdf

- De Souza Rosa L, Dinale A, Bamford S, et al. “High-Throughput Asynchronous Convolutions …” EBCCSP (2022). pdf

- Glover A, Dinale A, De Souza Rosa L, Bamford S, Bartolozzi C. “luvHarris …” IEEE TPAMI (2021). pdf

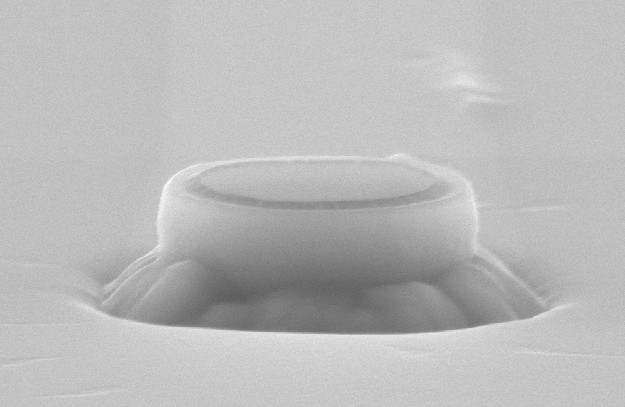

- Hosseini et al. “An organic spiking artificial neuron …” npj Flexible Electronics (accepted). pre-print

Full list on Google Scholar.